🎉 New Research Published at DIMVA 2025

I'm excited to announce that "Quantifying and Mitigating the Impact of Obfuscations on Machine-Learning-Based Decompilation Improvement" has been published at the 2025 Conference on Detection of Intrusions and Malware & Vulnerability Assessment (DIMVA 2025)!

The Research Team

This work was primarily conducted by Deniz Bölöni-Turgut—a bright undergraduate at Cornell University—as part of the REU in Software Engineering (REUSE) program at CMU. She was supervised by Luke Dramko from our research group.

What We Investigated

This paper tackles an important question in the evolving landscape of AI-powered reverse engineering: How do code obfuscations impact the effectiveness of these ML-based approaches? In the real world, adversaries often employ obfuscation techniques to make their code harder to analyze by reverse engineers. Although these obfuscation techniques were not designed with machine learning in mind, they can significantly modify the code, which raises the question of whether they could hinder the performance of ML models, which are currently trained on unobfuscated code.
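To make "semantics-preserving but structure-changing" concrete, here is a minimal sketch in C. The function names and the two hand-written tricks (an opaque predicate and an arithmetic substitution) are illustrative assumptions, not necessarily the obfuscations evaluated in the paper.

```c
#include <assert.h>
#include <stdint.h>

/* Original function: sums the first n elements of an array. */
uint32_t sum_plain(const uint32_t *values, uint32_t n) {
    uint32_t total = 0;
    for (uint32_t i = 0; i < n; i++) {
        total += values[i];
    }
    return total;
}

/* Hypothetical obfuscated variant with the same observable behavior. */
uint32_t sum_obfuscated(const uint32_t *values, uint32_t n) {
    uint32_t total = 0;
    for (uint32_t i = 0; i < n; i++) {
        /* Opaque predicate: i * (i + 1) is a product of consecutive
           integers, so it is always even (also under wraparound). */
        if ((i * (i + 1u)) % 2u == 0u) {
            /* Arithmetic substitution:
               a + b == (a ^ b) + 2 * (a & b)  (mod 2^32). */
            total = (total ^ values[i]) + 2u * (total & values[i]);
        } else {
            total -= values[i]; /* dead branch, never executed */
        }
    }
    return total;
}

/* Small self-check: both versions agree on a sample input. */
void check_equivalence(void) {
    const uint32_t data[] = {1u, 2u, 3u, 0xFFFFFFFFu};
    assert(sum_plain(data, 4) == sum_obfuscated(data, 4));
}
```

Both functions compute the same result, but the second has extra control flow and unfamiliar arithmetic, which is the kind of change that could confuse a model trained only on unobfuscated code. An optimizing compiler may simplify hand-written tricks like these; real obfuscators typically apply such transformations at the compiler or binary level.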

Key Findings

Our research provides important quantitative insights into how obfuscations affect ML-based decompilation:

- Obfuscations do negatively impact ML models: We demonstrated that semantics-preserving transformations that obscure program functionality significantly reduce the accuracy of machine learning-based decompilation tools.

- Training on obfuscated code helps: Our experiments show that training models on obfuscated code can partially recover the lost accuracy, making the tools more resilient to obfuscation techniques.

- Consistent results across multiple models: We validated our findings across three different state-of-the-art models from the literature (DIRTY, HexT5, and VarBERT), suggesting that our findings generalize.

- Practical implications for malware analysis: Since obfuscations are commonly used in malware, these findings are directly applicable to improving real-world binary analysis scenarios.

This work represents an important step forward in making ML-based decompilation tools more resilient against the obfuscation techniques commonly encountered in real-world binary analysis scenarios. As the field continues to evolve, understanding these vulnerabilities and developing robust solutions will be crucial for maintaining the effectiveness of AI-powered security tools.

Read More

Want to know more? Download the complete paper.

🎉 New Research Published at DSN 2025

I'm excited to announce that "A Human Study of Automatically Generated Decompiler Annotations" has been published at the 2025 IEEE/IFIP International Conference on Dependable Systems and Networks (DSN 2025)!

The Research Team

This work represents the culmination of Jeremy Lacomis's Ph.D. research, alongside our fantastic collaborators:

- Vanderbilt University: Yuwei Yang, Skyler Grandel, and Kevin Leach

- Carnegie Mellon University: Bogdan Vasilescu and Claire Le Goues

What We Studied

This paper investigates a critical question in reverse engineering: Do automatically generated variable names and type annotations actually help human analysts understand decompiled code?

Our study built upon DIRTY, our machine learning system that automatically generates meaningful variable names and type information for decompiled binaries. While DIRTY showed promising technical results, we wanted to understand its real-world impact on human reverse engineers.

Key Findings

- Surprisingly, the annotations did not significantly improve participants' task completion speed or accuracy

- This challenges assumptions about the direct correlation between code readability and task performance

- Participants preferred code with annotations over plain decompiled output

Read More

Interested in the full methodology and detailed results? Download the complete paper to dive deeper into our human study design, statistical analysis, and implications for future decompilation tools.

Right before the holidays, I, along with my co-authors of the journal article The Art, Science, and Engineering of Fuzzing: A Survey, received an early holiday present!

Congratulations!

On behalf of Vice President for Publications, David Ebert, I am writing to inform you that your paper, "The Art, Science, and Engineering of Fuzzing: A Survey," has been awarded the 2021 Best Paper Award from IEEE Transactions on Software Engineering by the IEEE Computer Society Publications Board.

This was quite unexpected, as our article was accepted back in 2019 -- four years ago! But it only "appeared" in the November 2021 issue of the journal.

You can access this article here or, as always, on my publications page.

It's been an exciting year so far. I'm happy to announce that two papers I co-authored received awards. Congratulations to the students who did all the heavy lifting -- Jeremy, Qibin, and Alex!

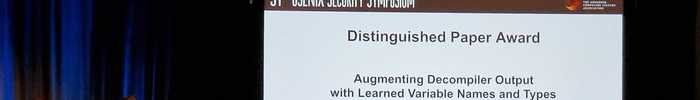

Distinguished Paper Award: Augmenting Decompiler Output with Learned Variable Names and Types

Qibin Chen, Jeremy Lacomis, Edward J. Schwartz, Claire Le Goues, Graham Neubig, and Bogdan Vasilescu. Augmenting Decompiler Output with Learned Variable Names and Types, (PDF) Proceedings of the 2022 USENIX Security Symposium. Received distinguished paper award.

This paper follows up on some of our earlier work in which we show how to improve decompiler output. Decompiler output is often substantially more readable than the lower-level alternative of reading disassembly code. But decompiler output still has a lot of shortcomings when it comes to information that is removed during the compilation process, such as variable names and type information. In our previous work, we showed that it is possible to recover meaningful variable names by learning appropriate variable names based on the context of the surrounding code.

In the new paper, Jeremy, Qibin and my coauthors explored whether it is also possible to recover high-level types via learning. There is a rich history of binary analysis work in the area of type inference, but this work generally focuses on syntactic types, such as struct {float; float}. These type inference algorithms are generally already built into decompilers. In our paper, we try to recover semantic types, such as struct {float x; float y} point which includes the type and field names, which are more valuable to a reverse engineer.

It turns out that we can recover semantic types even more accurately than variable names. This is in part because types are constrained by the way in which they are used. For example, an int can't be confused with a char because they are different sizes.
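The difference between syntactic and semantic types can be sketched in C as follows. The placeholder names (recovered_syntactic, field_0, field_4, a1) are assumptions chosen to resemble typical decompiler output; they are not taken from the paper.

```c
#include <assert.h>
#include <stddef.h>

/* Syntactic type: only the layout is known (two floats).
   The field names are meaningless placeholders. */
struct recovered_syntactic {
    float field_0;
    float field_4;
};

/* Semantic type: the same layout, plus the type and field names
   a developer would have written. */
struct point {
    float x;
    float y;
};

/* Both types have the same size and field offsets, so layout alone
   cannot tell a reverse engineer that this is a point. */
static_assert(sizeof(struct recovered_syntactic) == sizeof(struct point),
              "same size");
static_assert(offsetof(struct recovered_syntactic, field_4) ==
                  offsetof(struct point, y),
              "same field offset");

/* Size does rule out some candidates: on mainstream platforms
   a char is smaller than an int. */
static_assert(sizeof(char) < sizeof(int), "char is smaller than int");

/* The same computation, written against each type. */
float sum_fields(const struct recovered_syntactic *a1) {
    return a1->field_0 + a1->field_4;
}

float coordinate_sum(const struct point *p) {
    return p->x + p->y;
}
```

The two functions behave the same, but the second is far easier to read. The static assertions illustrate why usage constrains types: a candidate type whose size or layout does not match how the memory is accessed can be discarded.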

Best Paper Award: Learning to Superoptimize Real-world Programs

Alex Shypula, Pengcheng Yin, Jeremy Lacomis, Claire Le Goues, Edward Schwartz, and Graham Neubig. Learning to Superoptimize Real-world Programs, (Arxiv) (PDF) Proceedings of the 2022 Deep Learning for Code Workshop at the International Conference on Learning Representations. Received best paper award.

In this paper, Alex and our co-authors investigate whether neural models are able to learn and improve on optimizations at the assembly code level by looking at pairs of unoptimized and optimized code generated by an optimizing compiler. The short answer is that they can, and with reinforcement learning on top, they can learn to outperform an optimizing compiler in some cases! Superoptimization is an interesting problem in its own right, but what really excites me about this paper is that it demonstrates that neural models can learn very complex optimizations such as register allocation just by looking at the textual representation of assembly code. The optimizations the model can perform clearly indicate that the model is learning a substantial portion of x86 assembly code semantics merely by looking at examples. To me, this clearly signals that, with the right data, neural models are likely able to solve many binary analysis problems. I look forward to future work in which we combine traditional binary analysis techniques, such as explicit semantic decodings of instructions, with neural learning.

I'm happy to announce that a paper written with my colleagues, A Generic Technique for Automatically Finding Defense-Aware Code Reuse Attacks, will be published at ACM CCS 2020. This paper is based on some ideas I had while finishing my degree but did not have time to pursue. Fortunately, I was able to find time to work on them again at SEI, and this paper is the result. A pre-publication copy is available from the publications page.

Variable Recovery

As far back as I can remember, one of the accepted dogmas of reverse engineering is that when a program is compiled, some information about the program is lost, and there is no way to recover it. Variable names are one of the most frequently cited casualties of this idea. In fact, this argument is used by countless authors in the introductions of their papers to explain some of the unique challenges that binary analysis has compared to source analysis.

You can imagine how shocking (and cool!) it was, then, when my colleagues and I found that it's possible to recover a large percentage of variable names. Our key insight is that the semantics of the binary code that accesses a variable is actually a surprisingly good signal for what the variable was named. To exploit this, we took a huge amount of source code from GitHub, compiled it, and then decompiled it. We then trained a model to predict the variable names, which we can't see in an executable, based on the way that the code accesses those variables, which we can see in an executable. In Meaningful Variable Names for Decompiled Code: A Machine Translation Approach, we showed that this was possible using Statistical Machine Translation, which is one of the techniques used to translate between natural languages.
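As a rough illustration of the training data this produces, consider the pair below. The first function is the original source; the second is a hand-written approximation of what a decompiler might emit for it. The names sub_401000, a1, a2, v2, and v3 are hypothetical placeholders in the style of common decompilers, not actual tool output.

```c
#include <assert.h>
#include <stddef.h>

/* Original source, with the names the developer chose. */
size_t count_char(const char *str, char target) {
    size_t count = 0;
    for (size_t i = 0; str[i] != '\0'; i++) {
        if (str[i] == target) {
            count++;
        }
    }
    return count;
}

/* Approximation of decompiler output: same behavior, but the
   original names are gone and placeholders take their place. */
size_t sub_401000(const char *a1, char a2) {
    size_t v2 = 0; /* incremented on a match and returned: a counter */
    size_t v3 = 0; /* used only to index a1: a loop index */
    while (a1[v3] != '\0') {
        if (a1[v3] == a2) {
            ++v2;
        }
        ++v3;
    }
    return v2;
}

/* Small self-check: both versions agree on a sample input. */
void check_same_behavior(void) {
    assert(count_char("banana", 'a') == sub_401000("banana", 'a'));
}
```

A model trained on many such pairs would learn to map placeholders to plausible names from usage alone: v3 only indexes the string, so a name like i is likely, and v2 is incremented and returned, so a name like count fits.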

I'm happy to announce that our latest paper on this subject, DIRE: A Neural Approach to Decompiled Identifier Renaming, was accepted to ASE 2019. In this paper, we found that neural network models work really well for recovering variable names in decompiled code. I'll post our camera-ready version as soon as it's finished.

Code Reuse

I'll be speaking at the 2019 Central Pennsylvania Open Source Conference (CPOSC) in September. I attended CPOSC for the first time last year, and was very impressed by the quality of the talks. The name is a little misleading; talks are not necessarily related to open source software. I'll actually be giving a primer on code reuse attacks.
