I'm trying to document a few things that I do infrequently enough that I tend to forget how to do them, and need to rediscover the process each time. Next in this series is debugging the Ghidra decompiler. This is one of the only resources I know of that discusses this!

The process is roughly:

- cd /path/to/ghidra/Ghidra/Features/Decompiler/src/decompile/cpp
- make decomp_dbg
- Inside of Ghidra, select the "Debug Function Decompilation" menu item and save the XML file somewhere.
- Run SLEIGHHOME=/path/to/ghidra ./decomp_dbg. You should now be in the decomp_dbg interpreter.
- Use restore /path/to/debug.xml
- Use load function target_func
- Optionally use trace address insn_address
- Finally run decompile
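Since the whole point is not having to rediscover these steps, here is a minimal Python sketch that builds the same command sequence and can pipe it into decomp_dbg. The helper names (decomp_commands, run_decomp_dbg) are made up for illustration, and it assumes decomp_dbg reads its commands from standard input when it is not attached to a terminal, which is an assumption rather than documented behavior.

```python
import os
import subprocess


def decomp_commands(xml_path, function, trace_addresses=()):
    """Build the decomp_dbg command sequence from the steps above."""
    commands = [f"restore {xml_path}", f"load function {function}"]
    # "trace address" is optional: one command per instruction of interest.
    commands += [f"trace address {addr:#x}" for addr in trace_addresses]
    commands.append("decompile")
    return commands


def run_decomp_dbg(decomp_dbg, sleigh_home, commands):
    """Pipe the commands into decomp_dbg (assumes it accepts piped stdin)."""
    env = dict(os.environ, SLEIGHHOME=sleigh_home)
    script = "\n".join(commands) + "\n"
    result = subprocess.run([decomp_dbg], input=script, env=env,
                            capture_output=True, text=True, check=False)
    return result.stdout


if __name__ == "__main__":
    # Print the script instead of running it, so this works without Ghidra.
    for line in decomp_commands("/tmp/mydebug.xml", "main", [0x4115ec]):
        print(line)
```

Printing the commands first is a cheap way to check them before handing them to run_decomp_dbg with your own paths.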

Here's a full example:

~/g/g/G/F/D/s/d/cpp $ env SLEIGHHOME=/home/ed/ghidra/ghidra_10.4_PUBLIC/ ./decomp_dbg

[decomp]> restore /tmp/mydebug.xml

/tmp/mydebug.xml successfully loaded: Intel/AMD 32-bit x86

[decomp]> load function main

Function main: 0x00411530

[decomp]> trace address 0x4115ec

OK (1 ranges)

[decomp]> decompile

Decompiling main

DEBUG 0: extrapopsetup

0x004115ec:200: **

0x004115ec:200: ESP(0x004115ec:200) = ESP(free) + #0x4

DEBUG 1: funclink

0x004115ec:a5: call fCls3:8(free)

0x004115ec:a5: EAX(0x004115ec:a5) = call fCls3:8(free)(ECX(free),u0x10000019:1(0x004115ec:212))

0x004115ec:211: **

0x004115ec:211: u0x10000015(0x004115ec:211) = ESP(free) + #0x0

0x004115ec:212: **

0x004115ec:212: u0x10000019:1(0x004115ec:212) = *(ram,u0x10000015(0x004115ec:211))

DEBUG 2: heritage

0x004115ec:248: **

0x004115ec:248: ECX(0x004115ec:248) = [create] i0x004115ec:a5:8(free)

0x004115ec:25a: **

0x004115ec:25a: EDX(0x004115ec:25a) = [create] i0x004115ec:a5:8(free)

0x004115ec:26f: **

0x004115ec:26f: CF(0x004115ec:26f) = CF(0x004115dd:96) [] i0x004115ec:a5:8(free)

0x004115ec:280: **

0x004115ec:280: PF(0x004115ec:280) = PF(0x004115dd:9e) [] i0x004115ec:a5:8(free)

0x004115ec:291: **

0x004115ec:291: ZF(0x004115ec:291) = ZF(0x004115dd:9a) [] i0x004115ec:a5:8(free)

0x004115ec:2a2: **

0x004115ec:2a2: SF(0x004115ec:2a2) = SF(0x004115dd:99) [] i0x004115ec:a5:8(free)

0x004115ec:2b3: **

0x004115ec:2b3: OF(0x004115ec:2b3) = OF(0x004115dd:97) [] i0x004115ec:a5:8(free)

0x004115ec:2c4: **

0x004115ec:2c4: EIP(0x004115ec:2c4) = EIP(0x004115c8:2c3) [] i0x004115ec:a5:8(free)

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(free) - #0x4

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(0x004115cd:86) - #0x4

0x004115ec:a4: *(ram,ESP(free)) = #0x4115f1

0x004115ec:a4: *(ram,ESP(0x004115ec:a3)) = #0x4115f1

0x004115ec:211: u0x10000015(0x004115ec:211) = ESP(free) + #0x0

0x004115ec:211: u0x10000015(0x004115ec:211) = ESP(0x004115ec:a3) + #0x0

0x004115ec:a5: EAX(0x004115ec:a5) = call fCls3:8(free)(ECX(free),u0x10000019:1(0x004115ec:212))

0x004115ec:a5: EAX(0x004115ec:a5) = call fCls3:8(free)(ECX(0x004115e6:a2),u0x10000019:1(0x004115ec:212))

0x004115ec:200: ESP(0x004115ec:200) = ESP(free) + #0x4

0x004115ec:200: ESP(0x004115ec:200) = ESP(0x004115ec:a3) + #0x4

DEBUG 3: deadcode

0x004115ec:248: ECX(0x004115ec:248) = [create] i0x004115ec:a5:8(free)

0x004115ec:248: **

0x004115ec:25a: EDX(0x004115ec:25a) = [create] i0x004115ec:a5:8(free)

0x004115ec:25a: **

0x004115ec:26f: CF(0x004115ec:26f) = CF(0x004115dd:96) [] i0x004115ec:a5:8(free)

0x004115ec:26f: **

0x004115ec:280: PF(0x004115ec:280) = PF(0x004115dd:9e) [] i0x004115ec:a5:8(free)

0x004115ec:280: **

0x004115ec:291: ZF(0x004115ec:291) = ZF(0x004115dd:9a) [] i0x004115ec:a5:8(free)

0x004115ec:291: **

0x004115ec:2a2: SF(0x004115ec:2a2) = SF(0x004115dd:99) [] i0x004115ec:a5:8(free)

0x004115ec:2a2: **

0x004115ec:2b3: OF(0x004115ec:2b3) = OF(0x004115dd:97) [] i0x004115ec:a5:8(free)

0x004115ec:2b3: **

0x004115ec:2c4: EIP(0x004115ec:2c4) = EIP(0x004115c8:2c3) [] i0x004115ec:a5:8(free)

0x004115ec:2c4: **

DEBUG 4: sub2add

0x004115ec:2e4: **

0x004115ec:2e4: u0x100000a0(0x004115ec:2e4) = #0x4 * #0xffffffff

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(0x004115cd:86) - #0x4

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(0x004115cd:86) + u0x100000a0(0x004115ec:2e4)

DEBUG 5: propagatecopy

0x004115ec:a5: EAX(0x004115ec:a5) = call fCls3:8(free)(ECX(0x004115e6:a2),u0x10000019:1(0x004115ec:212))

0x004115ec:a5: EAX(0x004115ec:a5) = call fCls3:8(free)(u0x00007a80(0x004115e6:a1),u0x10000019:1(0x004115ec:212))

DEBUG 6: identityel

0x004115ec:211: u0x10000015(0x004115ec:211) = ESP(0x004115ec:a3) + #0x0

0x004115ec:211: u0x10000015(0x004115ec:211) = ESP(0x004115ec:a3)

DEBUG 7: propagatecopy

0x004115ec:212: u0x10000019:1(0x004115ec:212) = *(ram,u0x10000015(0x004115ec:211))

0x004115ec:212: u0x10000019:1(0x004115ec:212) = *(ram,ESP(0x004115ec:a3))

DEBUG 8: collapseconstants

0x004115ec:2e4: u0x100000a0(0x004115ec:2e4) = #0x4 * #0xffffffff

0x004115ec:2e4: u0x100000a0(0x004115ec:2e4) = #0xfffffffc

DEBUG 9: propagatecopy

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(0x004115cd:86) + u0x100000a0(0x004115ec:2e4)

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(0x004115cd:86) + #0xfffffffc

DEBUG 10: addmultcollapse

0x004115ec:200: ESP(0x004115ec:200) = ESP(0x004115ec:a3) + #0x4

0x004115ec:200: ESP(0x004115ec:200) = ESP(0x004115cd:86) + #0x0

DEBUG 11: earlyremoval

0x004115ec:211: u0x10000015(0x004115ec:211) = ESP(0x004115ec:a3)

0x004115ec:211: **

DEBUG 12: earlyremoval

0x004115ec:2e4: u0x100000a0(0x004115ec:2e4) = #0xfffffffc

0x004115ec:2e4: **

DEBUG 13: addmultcollapse

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(0x004115cd:86) + #0xfffffffc

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(0x004115aa:26a) + #0xfffffffc

DEBUG 14: identityel

0x004115ec:200: ESP(0x004115ec:200) = ESP(0x004115cd:86) + #0x0

0x004115ec:200: ESP(0x004115ec:200) = ESP(0x004115cd:86)

DEBUG 15: multicollapse

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(0x004115aa:26a) + #0xfffffffc

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(0x00411574:4b) + #0xfffffffc

DEBUG 16: addmultcollapse

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(0x00411574:4b) + #0xfffffffc

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(i) + #0xfffffe94

DEBUG 17: earlyremoval

0x004115ec:200: ESP(0x004115ec:200) = ESP(0x004115cd:86)

0x004115ec:200: **

DEBUG 18: stackptrflow

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(i) + #0xfffffe94

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(i) + #0xfffffe94

DEBUG 19: storevarnode

0x004115ec:a4: *(ram,ESP(0x004115ec:a3)) = #0x4115f1

0x004115ec:a4: s0xfffffe94(0x004115ec:a4) = #0x4115f1

DEBUG 20: loadvarnode

0x004115ec:212: u0x10000019:1(0x004115ec:212) = *(ram,ESP(0x004115ec:a3))

0x004115ec:212: **

0x004115ec:a5: EAX(0x004115ec:a5) = call fCls3:8(free)(u0x00007a80(0x004115e6:a1),u0x10000019:1(0x004115ec:212))

0x004115ec:a5: EAX(0x004115ec:a5) = call fCls3:8(free)(u0x00007a80(0x004115e6:a1))

DEBUG 21: heritage

0x004115ec:2fc: **

0x004115ec:2fc: r0x0041a048(0x004115ec:2fc) = r0x0041a048(0x004115c8:2fb) [] i0x004115ec:a5:8(free)

0x004115ec:312: **

0x004115ec:312: s0xfffffe80(0x004115ec:312) = s0xfffffe80(0x004115c8:311) [] i0x004115ec:a5:8(free)

0x004115ec:325: **

0x004115ec:325: s0xfffffe84(0x004115ec:325) = s0xfffffe84(0x004115c8:324) [] i0x004115ec:a5:8(free)

0x004115ec:338: **

0x004115ec:338: s0xfffffe88(0x004115ec:338) = s0xfffffe88(0x004115c8:337) [] i0x004115ec:a5:8(free)

0x004115ec:34b: **

0x004115ec:34b: s0xfffffe8c(0x004115ec:34b) = s0xfffffe8c(0x004115c8:34a) [] i0x004115ec:a5:8(free)

0x004115ec:35e: **

0x004115ec:35e: s0xfffffe90(0x004115ec:35e) = s0xfffffe90(0x004115c8:35d) [] i0x004115ec:a5:8(free)

0x004115ec:371: **

0x004115ec:371: s0xfffffe94(0x004115ec:371) = s0xfffffe94(0x004115ec:a4) [] i0x004115ec:a5:8(free)

0x004115ec:384: **

0x004115ec:384: s0xfffffe98(0x004115ec:384) = s0xfffffe98(0x004115c8:383) [] i0x004115ec:a5:8(free)

0x004115ec:397: **

0x004115ec:397: s0xfffffe9c(0x004115ec:397) = s0xfffffe9c(0x004115c8:396) [] i0x004115ec:a5:8(free)

0x004115ec:3aa: **

0x004115ec:3aa: s0xfffffea0(0x004115ec:3aa) = s0xfffffea0(0x004115c8:3a9) [] i0x004115ec:a5:8(free)

0x004115ec:3bd: **

0x004115ec:3bd: s0xfffffea4(0x004115ec:3bd) = s0xfffffea4(0x004115c8:3bc) [] i0x004115ec:a5:8(free)

0x004115ec:3d0: **

0x004115ec:3d0: s0xfffffea8(0x004115ec:3d0) = s0xfffffea8(0x004115c8:3cf) [] i0x004115ec:a5:8(free)

0x004115ec:3e3: **

0x004115ec:3e3: s0xfffffeb0(0x004115ec:3e3) = s0xfffffeb0(0x004115c8:3e2) [] i0x004115ec:a5:8(free)

0x004115ec:3f6: **

0x004115ec:3f6: s0xfffffebc(0x004115ec:3f6) = s0xfffffebc(0x004115c8:3f5) [] i0x004115ec:a5:8(free)

0x004115ec:409: **

0x004115ec:409: s0xfffffec8(0x004115ec:409) = s0xfffffec8(0x004115c8:408) [] i0x004115ec:a5:8(free)

0x004115ec:41c: **

0x004115ec:41c: s0xfffffed4(0x004115ec:41c) = s0xfffffed4(0x004115d0:8f) [] i0x004115ec:a5:8(free)

0x004115ec:42f: **

0x004115ec:42f: s0xfffffee0(0x004115ec:42f) = s0xfffffee0(0x004115c8:42e) [] i0x004115ec:a5:8(free)

0x004115ec:442: **

0x004115ec:442: s0xfffffeec(0x004115ec:442) = s0xfffffeec(0x004115c8:441) [] i0x004115ec:a5:8(free)

0x004115ec:455: **

0x004115ec:455: s0xfffffef8(0x004115ec:455) = s0xfffffef8(0x004115c8:454) [] i0x004115ec:a5:8(free)

0x004115ec:468: **

0x004115ec:468: s0xffffff04(0x004115ec:468) = s0xffffff04(0x004115c8:467) [] i0x004115ec:a5:8(free)

0x004115ec:47b: **

0x004115ec:47b: s0xffffffd0(0x004115ec:47b) = s0xffffffd0(0x004115c8:47a) [] i0x004115ec:a5:8(free)

0x004115ec:48e: **

0x004115ec:48e: s0xffffffdc(0x004115ec:48e) = s0xffffffdc(0x004115c8:48d) [] i0x004115ec:a5:8(free)

0x004115ec:4a1: **

0x004115ec:4a1: s0xffffffe8(0x004115ec:4a1) = s0xffffffe8(0x004115c8:4a0) [] i0x004115ec:a5:8(free)

0x004115ec:4b4: **

0x004115ec:4b4: s0xfffffff0(0x004115ec:4b4) = s0xfffffff0(0x004115c8:4b3) [] i0x004115ec:a5:8(free)

0x004115ec:4c7: **

0x004115ec:4c7: s0xfffffff4(0x004115ec:4c7) = s0xfffffff4(0x004115c8:4c6) [] i0x004115ec:a5:8(free)

0x004115ec:4da: **

0x004115ec:4da: s0xfffffff8(0x004115ec:4da) = s0xfffffff8(0x004115d6:92) [] i0x004115ec:a5:8(free)

0x004115ec:4ed: **

0x004115ec:4ed: s0xfffffffc(0x004115ec:4ed) = s0xfffffffc(0x004115c8:4ec) [] i0x004115ec:a5:8(free)

DEBUG 22: deadcode

0x004115ec:a3: ESP(0x004115ec:a3) = ESP(i) + #0xfffffe94

0x004115ec:a3: **

0x004115ec:312: s0xfffffe80(0x004115ec:312) = s0xfffffe80(0x004115c8:311) [] i0x004115ec:a5:8(free)

0x004115ec:312: **

0x004115ec:325: s0xfffffe84(0x004115ec:325) = s0xfffffe84(0x004115c8:324) [] i0x004115ec:a5:8(free)

0x004115ec:325: **

0x004115ec:338: s0xfffffe88(0x004115ec:338) = s0xfffffe88(0x004115c8:337) [] i0x004115ec:a5:8(free)

0x004115ec:338: **

0x004115ec:34b: s0xfffffe8c(0x004115ec:34b) = s0xfffffe8c(0x004115c8:34a) [] i0x004115ec:a5:8(free)

0x004115ec:34b: **

0x004115ec:397: s0xfffffe9c(0x004115ec:397) = s0xfffffe9c(0x004115c8:396) [] i0x004115ec:a5:8(free)

0x004115ec:397: **

0x004115ec:3aa: s0xfffffea0(0x004115ec:3aa) = s0xfffffea0(0x004115c8:3a9) [] i0x004115ec:a5:8(free)

0x004115ec:3aa: **

0x004115ec:3bd: s0xfffffea4(0x004115ec:3bd) = s0xfffffea4(0x004115c8:3bc) [] i0x004115ec:a5:8(free)

0x004115ec:3bd: **

0x004115ec:409: s0xfffffec8(0x004115ec:409) = s0xfffffec8(0x004115c8:408) [] i0x004115ec:a5:8(free)

0x004115ec:409: **

0x004115ec:42f: s0xfffffee0(0x004115ec:42f) = s0xfffffee0(0x004115c8:42e) [] i0x004115ec:a5:8(free)

0x004115ec:42f: **

0x004115ec:442: s0xfffffeec(0x004115ec:442) = s0xfffffeec(0x004115c8:441) [] i0x004115ec:a5:8(free)

0x004115ec:442: **

0x004115ec:455: s0xfffffef8(0x004115ec:455) = s0xfffffef8(0x004115c8:454) [] i0x004115ec:a5:8(free)

0x004115ec:455: **

0x004115ec:468: s0xffffff04(0x004115ec:468) = s0xffffff04(0x004115c8:467) [] i0x004115ec:a5:8(free)

0x004115ec:468: **

0x004115ec:47b: s0xffffffd0(0x004115ec:47b) = s0xffffffd0(0x004115c8:47a) [] i0x004115ec:a5:8(free)

0x004115ec:47b: **

0x004115ec:48e: s0xffffffdc(0x004115ec:48e) = s0xffffffdc(0x004115c8:48d) [] i0x004115ec:a5:8(free)

0x004115ec:48e: **

0x004115ec:4ed: s0xfffffffc(0x004115ec:4ed) = s0xfffffffc(0x004115c8:4ec) [] i0x004115ec:a5:8(free)

0x004115ec:4ed: **

DEBUG 23: indirectcollapse

0x004115ec:384: s0xfffffe98(0x004115ec:384) = s0xfffffe98(0x004115c8:383) [] i0x004115ec:a5:8(free)

0x004115ec:384: s0xfffffe98(0x004115ec:384) = s0xfffffe98(0x00411563:3d) [] i0x004115ec:a5:8(free)

DEBUG 24: propagatecopy

0x004115ec:a5: EAX(0x004115ec:a5) = call fCls3:8(free)(u0x00007a80(0x004115e6:a1))

0x004115ec:a5: EAX(0x004115ec:a5) = call fCls3:8(free)(EAX(0x004115c8:83))

DEBUG 25: earlyremoval

0x004115ec:35e: s0xfffffe90(0x004115ec:35e) = s0xfffffe90(0x004115c8:35d) [] i0x004115ec:a5:8(free)

0x004115ec:35e: **

DEBUG 26: earlyremoval

0x004115ec:371: s0xfffffe94(0x004115ec:371) = s0xfffffe94(0x004115ec:a4) [] i0x004115ec:a5:8(free)

0x004115ec:371: **

DEBUG 27: indirectcollapse

0x004115ec:384: s0xfffffe98(0x004115ec:384) = s0xfffffe98(0x00411563:3d) [] i0x004115ec:a5:8(free)

0x004115ec:384: **

DEBUG 28: propagatecopy

0x004115ec:41c: s0xfffffed4(0x004115ec:41c) = s0xfffffed4(0x004115d0:8f) [] i0x004115ec:a5:8(free)

0x004115ec:41c: s0xfffffed4(0x004115ec:41c) = EAX(0x004115c8:83) [] i0x004115ec:a5:8(free)

DEBUG 29: earlyremoval

0x004115ec:a4: s0xfffffe94(0x004115ec:a4) = #0x4115f1

0x004115ec:a4: **

DEBUG 30: setcasts

0x004115ec:50b: **

0x004115ec:50b: EAX(0x004115ec:50b) = (cast) u0x10000129(0x004115ec:a5)

0x004115ec:a5: EAX(0x004115ec:a5) = call fCls3:8(free)(EAX(0x004115c8:50a))

0x004115ec:a5: u0x10000129(0x004115ec:a5) = call fCls3:8(free)(EAX(0x004115c8:50a))

Decompilation complete

In my case, I wanted to know why the function call at 0x4115ec thinks that EAX is being passed as an argument instead of ECX.

Here is ChatGPT's explanation. And it's right! ECX will be equal to the value of EAX at the time of the call.
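The trace output gets long quickly, so a small parser can help. The sketch below assumes, based only on the example output above, that each DEBUG block lists ops in before/after pairs and that ** stands for an op that does not exist at that point. The function names are hypothetical and nothing here is part of Ghidra.

```python
import re
from collections import Counter

HEADER = re.compile(r"^DEBUG (\d+): (\w+)$")
OP_LINE = re.compile(r"^0x[0-9a-f]+:[0-9a-f]+: ")


def parse_trace(text):
    """Group trace output into (rule_name, [(before, after), ...]) entries."""
    steps = []
    pending = None  # first line of a pair that is still waiting for its partner
    for raw in text.splitlines():
        line = raw.strip()
        header = HEADER.match(line)
        if header:
            steps.append((header.group(2), []))
            pending = None
        elif steps and OP_LINE.match(line):
            if pending is None:
                pending = line
            else:
                steps[-1][1].append((pending, line))
                pending = None
    return steps


def rule_counts(steps):
    """Count how many times each rule or action touched the traced address."""
    return Counter(name for name, _ in steps)


# Two blocks copied from the trace above.
SAMPLE = """\
DEBUG 6: identityel
0x004115ec:211: u0x10000015(0x004115ec:211) = ESP(0x004115ec:a3) + #0x0
0x004115ec:211: u0x10000015(0x004115ec:211) = ESP(0x004115ec:a3)
DEBUG 11: earlyremoval
0x004115ec:211: u0x10000015(0x004115ec:211) = ESP(0x004115ec:a3)
0x004115ec:211: **
"""

if __name__ == "__main__":
    for name, pairs in parse_trace(SAMPLE):
        for before, after in pairs:
            print(f"{name}: {before} -> {after}")
```

Filtering the parsed steps for lines that mention ECX or EAX is then a quick way to find the step where one replaces the other (the propagatecopy steps in my trace).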

Useful commands

- Pressing tab will auto-complete the available commands. There isn't a help command.
- list action shows the plan of actions/passes
- debug action foo can enable extra debug info for some actions, such as inputprototype

Sometimes it is necessary to use a bleeding edge Linux kernel such as drm-intel-next to debug hardware issues. In this post, I'll discuss how to do this on Ubuntu Jammy.

Ubuntu has a pretty cool automated mainline kernel build system that also tracks branches like drm-tip and drm-intel-next. Sadly, it's usually based off whatever Ubuntu release is under development, and may not be compatible with the most recent LTS release. This is currently the case with Ubuntu Jammy.

I want to run drm-intel-next, which is available here. But if you attempt to install this kernel on Jammy, and have DKMS modules, you'll run into an error because Jammy's glibc version is too old. The solution is to build the kernel from scratch. But since there aren't any source debs, and there doesn't really seem to be any documentation, I always forget how to do this.

So here's how to do it. The first step is to check out the kernel source from git. Right now, at the top of the drm-intel-next mainline page it says:

To obtain the source from which they are built fetch the commit below:

git://git.launchpad.net/~ubuntu-kernel-test/ubuntu/+source/linux/+git/mainline-crack cod/mainline/cod/tip/drm-intel-next/2023-10-13

So we'll do that:

~/kernels $ git clone --depth 1 -b cod/mainline/cod/tip/drm-intel-next/2023-10-13 https://git.launchpad.net/~ubuntu-kernel-test/ubuntu/+source/linux/+git/mainline-crack drm-intel-next

Cloning into 'drm-intel-next'...

remote: Enumerating objects: 86818, done.

remote: Counting objects: 100% (86818/86818), done.

remote: Compressing objects: 100% (82631/82631), done.

remote: Total 86818 (delta 10525), reused 21994 (delta 3264)

Receiving objects: 100% (86818/86818), 234.75 MiB | 2.67 MiB/s, done.

Resolving deltas: 100% (10525/10525), done.

Note: switching to '458311d2d5e13220df5f8b10e444c7252ac338ce'.

You are in 'detached HEAD' state. You can look around, make experimental

changes and commit them, and you can discard any commits you make in this

state without impacting any branches by switching back to a branch.

If you want to create a new branch to retain commits you create, you may

do so (now or later) by using -c with the switch command. Example:

git switch -c <new-branch-name>

Or undo this operation with:

git switch -

Turn off this advice by setting config variable advice.detachedHead to false

Updating files: 100% (81938/81938), done.

Side note: The --depth 1 will speed the clone up, because we don't really care about the entire git history.

Next, cd drm-intel-next.

As a sanity check, verify that the debian directory, which contains the Ubuntu build scripts, is present.

To start, you need to run fakeroot debian/rules clean which will generate a few files including debian/changelog.

Next, run fakeroot debian/rules binary and wait a few seconds. I got an error:

~/k/drm-intel-next $ fakeroot debian/rules binary

[... snip ...]

make ARCH=x86 CROSS_COMPILE=x86_64-linux-gnu- HOSTCC=x86_64-linux-gnu-gcc-13 CC=x86_64-linux-gnu-gcc-13 KERNELVERSION=6.6.0-060600rc2drmintelnext20231013- CONFIG_DEBUG_SECTION_MISMATCH=y KBUILD_BUILD_VERSION="202310130203" LOCALVERSION= localver-extra= CFLAGS_MODULE="-DPKG_ABI=060600rc2drmintelnext20231013" PYTHON=python3 O=/home/ed/kernels/drm-intel-next/debian/tmp-headers INSTALL_HDR_PATH=/home/ed/kernels/drm-intel-next/debian/linux-libc-dev/usr -j16 headers_install

make[1]: Entering directory '/home/ed/kernels/drm-intel-next'

make[2]: Entering directory '/home/ed/kernels/drm-intel-next/debian/tmp-headers'

HOSTCC scripts/basic/fixdep

/bin/sh: 1: x86_64-linux-gnu-gcc-13: not found

make[4]: *** [/home/ed/kernels/drm-intel-next/scripts/Makefile.host:114: scripts/basic/fixdep] Error 127

make[3]: *** [/home/ed/kernels/drm-intel-next/Makefile:633: scripts_basic] Error 2

make[2]: *** [/home/ed/kernels/drm-intel-next/Makefile:234: __sub-make] Error 2

make[2]: Leaving directory '/home/ed/kernels/drm-intel-next/debian/tmp-headers'

make[1]: *** [Makefile:234: __sub-make] Error 2

make[1]: Leaving directory '/home/ed/kernels/drm-intel-next'

make: *** [debian/rules.d/2-binary-arch.mk:559: install-arch-headers] Error 2

Naturally, gcc-13 isn't available on Jammy. This is why I hate computers.

gcc-13 appears hard-coded into the build scripts:

~/k/drm-intel-next $ fgrep -R gcc-13 .

./init/Kconfig:# It's still broken in gcc-13, so no upper bound yet.

./debian/control: gcc-13, gcc-13-aarch64-linux-gnu [arm64] <cross>, gcc-13-arm-linux-gnueabihf [armhf] <cross>, gcc-13-powerpc64le-linux-gnu [ppc64el] <cross>, gcc-13-riscv64-linux-gnu [riscv64] <cross>, gcc-13-s390x-linux-gnu [s390x] <cross>, gcc-13-x86-64-linux-gnu [amd64] <cross>,

./debian/rules.d/0-common-vars.mk:export gcc?=gcc-13

./debian.master/config/annotations:CONFIG_CC_VERSION_TEXT policy<{'amd64': '"x86_64-linux-gnu-gcc-13 (Ubuntu 13.2.0-4ubuntu3) 13.2.0"', 'arm64': '"aarch64-linux-gnu-gcc-13 (Ubuntu 13.2.0-4ubuntu3) 13.2.0"', 'armhf': '"arm-linux-gnueabihf-gcc-13 (Ubuntu 13.2.0-4ubuntu3) 13.2.0"', 'ppc64el': '"powerpc64le-linux-gnu-gcc-13 (Ubuntu 13.2.0-4ubuntu3) 13.2.0"', 'riscv64': '"riscv64-linux-gnu-gcc-13 (Ubuntu 13.2.0-4ubuntu3) 13.2.0"', 's390x': '"s390x-linux-gnu-gcc-13 (Ubuntu 13.2.0-4ubuntu3) 13.2.0"'}>

Well, let's change them to gcc-12 and see what happens. Be prepared to wait a lot longer this time...

~/k/drm-intel-next $ sed -i -e 's/gcc-13/gcc-12/g' debian/{control,rules.d/0-common-vars.mk} debian.master/config/annotations

~/k/drm-intel-next $ fakeroot debian/rules binary

[... lots of kernel compilation output ...]

# Compress kernel modules

find debian/linux-image-unsigned-6.6.0-060600rc2drmintelnext20231013-generic -name '*.ko' -print0 | xargs -0 -n1 -P 16 zstd -19 --quiet --rm

stdout is a console, aborting

make: *** [debian/rules.d/2-binary-arch.mk:622: binary-generic] Error 123

Well, that's annoying. It seems that the general build logic calls zstd to compress any .ko files it finds. But if none are found, xargs still runs zstd once with no arguments, and the rule fails. Perhaps newer versions of zstd don't fail when called with no arguments. Anyway, apply the following patch, which uses xargs -r so that zstd is not run when there is no input:

--- a/debian/rules.d/2-binary-arch.mk

+++ b/debian/rules.d/2-binary-arch.mk

@@ -568,7 +568,7 @@ define dh_all

dh_installdocs -p$(1)

dh_compress -p$(1)

# Compress kernel modules

- find debian/$(1) -name '*.ko' -print0 | xargs -0 -n1 -P $(CONCURRENCY_LEVEL) zstd -19 --quiet --rm

+ find debian/$(1) -name '*.ko' -print0 | xargs -r -0 -n1 -P $(CONCURRENCY_LEVEL) zstd -19 --quiet --rm

dh_fixperms -p$(1) -X/boot/

dh_shlibdeps -p$(1) $(shlibdeps_opts)

dh_installdeb -p$(1)

Re-run fakeroot debian/rules binary and if all goes well, you should end up with a bunch of .deb files in the parent directory, and something like:

~/k/drm-intel-next $ fakeroot debian/rules binary

[... lots of kernel compilation output ...]

dpkg-deb: building package 'linux-source-6.6.0' in '../linux-source-6.6.0_6.6.0-060600rc2drmintelnext20231013.202310130203_all.deb'.

dpkg-deb: building package 'linux-headers-6.6.0-060600rc2drmintelnext20231013' in '../linux-headers-6.6.0-060600rc2drmintelnext20231013_6.6.0-060600rc2drmintelnext20231013.202310130203_all.deb'.

dpkg-deb: building package 'linux-tools-common' in '../linux-tools-common_6.6.0-060600rc2drmintelnext20231013.202310130203_all.deb'.

dpkg-deb: building package 'linux-cloud-tools-common' in '../linux-cloud-tools-common_6.6.0-060600rc2drmintelnext20231013.202310130203_all.deb'.

dpkg-deb: building package 'linux-tools-host' in '../linux-tools-host_6.6.0-060600rc2drmintelnext20231013.202310130203_all.deb'.

dpkg-deb: building package 'linux-doc' in '../linux-doc_6.6.0-060600rc2drmintelnext20231013.202310130203_all.deb'.

Now install the desired packages with sudo dpkg -i <paths to .deb files>.
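As an aside, the xargs -r change from the patch can be checked in isolation. This is only a sketch: it assumes an xargs binary is on the PATH and uses echo as a harmless stand-in for zstd. With GNU xargs, the call without -r typically still runs the command once on empty input, which is the situation that made zstd abort.

```python
import subprocess


def xargs_output(extra_flags):
    """Run `xargs <flags> echo ran` with empty stdin and return its stdout."""
    result = subprocess.run(["xargs", *extra_flags, "echo", "ran"],
                            input="", capture_output=True, text=True,
                            check=False)
    return result.stdout


if __name__ == "__main__":
    # Without -r the command may still run once even though there is no input.
    print("without -r:", repr(xargs_output([])))
    # With -r (--no-run-if-empty in GNU xargs) the command is skipped.
    print("with -r:   ", repr(xargs_output(["-r"])))
```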

Some days I hate computers. Today is one of those days. My work computer froze over the weekend (which is another long, frustrating story that I won't go into right now), so I had to reboot. As usual, I logged into our Pulse Secure VPN, and opened up Chrome. And Chrome can't resolve anything. I can't get to regular internet sites or intranet sites. What the heck?

My first thought is that this is somehow proxy related. But no, even when disabling the proxy, I still can't resolve internal hostnames.

But tools like dig and ping work. I open up Firefox, and that works too.

OK, that's weird. I open up chrome://net-internals/#dns in Chrome and confirm that it can't resolve anything. I try flushing the cache, but that doesn't work. I try a few other things, like disabling DNS prefetching and safe browsing, but none of those help either.

I take a look at /etc/resolv.conf, which contains a VPN DNS server presumably added by Pulse Secure, and 127.0.0.53 for the systemd-resolved resolver. I confirm that resolvectl does not know about the Pulse Secure DNS server. I add it manually with resolvectl dns tun0 <server>, and Chrome starts working again. OK, well that's good. But how do we fix it permanently?

This seems relevant: PulseSecure VPN does not work with systemd-resolved. Oh, maybe not. The "fix" is to publish documentation that the Pulse Secure developers should read. Sigh. After reading more closely, I see something about the resolvconf command, which they do already support. I don't seem to have that command, but that is easily fixed by an apt install resolvconf, and I confirm that after reconnecting to the VPN, systemd-resolved knows of the VPN DNS servers. And Chrome works. Yay!
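The check I did by hand (is there a DNS server in /etc/resolv.conf that systemd-resolved doesn't know about?) can be sketched in a few lines of Python. The function names and the 10.0.0.1 address are made up for illustration, and the list of servers known to systemd-resolved is passed in by hand rather than parsed from resolvectl output, since I don't want to guess at that format.

```python
STUB_RESOLVER = "127.0.0.53"  # systemd-resolved's local stub listener


def nameservers(resolv_conf_text):
    """Return the nameserver addresses listed in resolv.conf-style text."""
    servers = []
    for line in resolv_conf_text.splitlines():
        stripped = line.strip()
        if stripped.startswith(("#", ";")):
            continue  # comment line
        fields = stripped.split()
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers


def unknown_to_resolved(resolv_conf_text, resolved_servers):
    """Servers in resolv.conf (other than the stub) missing from resolved."""
    known = set(resolved_servers)
    return [server for server in nameservers(resolv_conf_text)
            if server != STUB_RESOLVER and server not in known]


if __name__ == "__main__":
    # Hypothetical file contents; 10.0.0.1 stands in for the VPN DNS server.
    sample = "# added by VPN client\nnameserver 10.0.0.1\nnameserver 127.0.0.53\n"
    print(unknown_to_resolved(sample, resolved_servers=[]))
```

A non-empty result is the situation I was in: something that only talks to systemd-resolved never sees the VPN's DNS server.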

So what happened that this suddenly became a problem? I'm not sure. One possibility is that Chrome started ignoring /etc/resolv.conf and directly using systemd-resolved if it appears to be available.

I really hate when my computer stops working, so I hope that if you are affected by this problem and find this blog post, it helps you out.

I've been feeling left behind by all the exciting news surrounding AI, so I've been quietly working on a project to get myself up to speed with some modern Transformers models for NLP. This project is mostly a learning exercise, but I'm sharing it in the hopes that it is still interesting!

Background: Functions, Methods and OOAnalyzer

One of my favorite projects is OOAnalyzer, which is part of SEI's Pharos static binary analysis framework. As the name suggests, it is a binary analysis tool for analyzing object-oriented (OO) executables to learn information about their high level structure. This structure includes classes, the methods assigned to each class, and the relationships between classes (such as inheritance). What's really cool about OOAnalyzer is that it is built on rules written in Prolog -- yes, Prolog! So it's both interesting academically and practically; people do use OOAnalyzer in practice. For more information, check out the original paper or a video of my talk.

Along the way to understanding a program's class hierarchy, OOAnalyzer needs to solve many smaller problems. One of these problems is: Given a function in an executable, does this function correspond to an object-oriented method in the source code? In my humble opinion, OOAnalyzer is pretty awesome, but one of the yucky things about it is that it contains many hard-coded assumptions that are only valid for Microsoft Visual C++ on 32-bit x86 (or just x86 MSVC to save some space!)

It just so happens that on this compiler and platform, most OO methods use the thiscall calling convention, which passes a pointer to the this object in the ecx register. Below is an interactive Godbolt example that shows a simple method mymethod being compiled by x86 MSVC. You can see on line 7 that the thisptr is copied from ecx onto the stack at offset -4. On line 9, you can see that arg is passed on the stack. In contrast, on line 20, myfunc only receives its argument on the stack, and does not access ecx.

(Note that this assembly code comes from the compiler and includes the name of functions, which makes it obvious whether a function corresponds to an OO method. Unfortunately this is not the case when reverse engineering without access to source code!)

Because most non-thisptr arguments are passed on the stack in x86 MSVC, but the thisptr is passed in ecx, seeing an argument in the ecx register is highly suggestive (but not definitive) that the function corresponds to an OO method. OOAnalyzer has a variety of heuristics based on this notion that try to determine whether a function corresponds to an OO method. These work well, but they're specific to x86 MSVC. What if we wanted to generalize to other compilers? Maybe we could learn to do that. But first, let's see if we can learn to do this for x86 MSVC.
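To make the ecx idea concrete, here is a deliberately naive Python sketch of such a heuristic. It is not OOAnalyzer's implementation (that is written in Prolog and is far more careful). It assumes Intel-syntax disassembly given as one instruction per string, ignores control flow, and the function name is made up for illustration.

```python
import re

ECX = re.compile(r"\becx\b")
OVERWRITES = {"mov", "lea", "pop", "movzx", "movsx"}


def reads_ecx_before_write(instructions):
    """Naively decide whether ecx is read before it is overwritten."""
    for insn in instructions:
        mnemonic, _, rest = insn.strip().lower().partition(" ")
        operands = [op.strip() for op in rest.split(",")] if rest.strip() else []
        if mnemonic == "call":
            # ecx is caller-saved, so stop here; "call ecx" itself is a read.
            return bool(ECX.search(rest))
        if mnemonic in {"xor", "sub"} and operands == ["ecx", "ecx"]:
            return False  # zeroing idiom, not a real read
        dest, sources = (operands[0], operands[1:]) if operands else ("", [])
        if any(ECX.search(op) for op in sources):
            return True
        if dest == "ecx":
            # mov/lea/pop replace the value; anything else (add, cmp, push)
            # reads it. Note "push ecx" is sometimes used only to reserve
            # stack space, so this can over-report.
            return mnemonic not in OVERWRITES
        if ECX.search(dest):
            return True  # ecx used inside a memory operand such as [ecx+8]
    return False


if __name__ == "__main__":
    method = ["push ebp", "mov ebp, esp", "mov dword ptr [ebp-4], ecx"]
    func = ["push ebp", "mov ebp, esp", "mov eax, dword ptr [ebp+8]",
            "pop ebp", "ret"]
    print(reads_ecx_before_write(method), reads_ecx_before_write(func))
```

Even this toy version shows why the rule is suggestive rather than definitive: anything that happens to read ecx early looks like a method.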

Learning to the Rescue

Let's play with some machine learning!

Step 1: Create a Dataset

You can't learn without data, so the first thing I had to do was create a dataset. Fortunately, I already had a lot of useful tools and projects for generating ground truth about OO programs that I could reuse.

BuildExes

The first project I used was BuildExes. This is a project that takes several test programs that are distributed as part of OOAnalyzer and builds them with many versions of MSVC and a variety of compiler options. The cute thing about BuildExes is that it uses Azure pipelines to install different versions of MSVC using the Chocolatey package manager and perform the compilations. Otherwise we'd have to install eight MSVC versions, which sounds like a pain to me. BuildExes uses a mix of publicly available Chocolatey packages and some that I created for older versions of MSVC that no one else cares about 🤣.

When BuildExes runs on Azure pipelines, it produces an artifact consisting of a large number of executables that I can use as my dataset.

Ground Truth

As part of our evaluations for the OOAnalyzer paper, we wrote a variety of scripts that extracted ground truth information out of PDB debugging symbols files (which, conveniently, are also included in the BuildExes artifact!) These scripts aren't publicly available, but they aren't top secret and we've shared them with other researchers. They essentially call a tool to decode PDB files into a textual representation and then parse the results.

Putting it Together

Here is the script that produces the ground truth dataset. It's a bit obscure, but it's not very complicated. Basically, for each executable in the BuildExes project, it reads the ground truth file, and also uses the bat-dis tool from the ROSE Binary Analysis Framework to disassemble the program.

The initial dataset is available on 🤗 HuggingFace. Aside: I love that 🤗 HuggingFace lets you browse datasets to see what they look like.

Step 2: Split the Data

The next step is to split the data into training and test sets. In ML, the best practice is generally to ensure that there is no overlap between the training and test sets. This is so that the performance of a model on the test set represents performance on "unseen examples" that the model has not been trained on. But this is tricky for a few reasons.

First, software is natural, which means that we can expect that distinct programmers will naturally write the same code over and over again. If a function is independently written multiple times, should it really count as a "previously seen example"? Unfortunately, we can't distinguish when a function is independently written multiple times or simply copied. So when we encounter a duplicate function, what should we do? Discard it entirely? Allow it to be in both the training and test sets? There is a related question for functions that are very similar. If two functions only differ by an identifier name, would knowledge of one constitute knowledge of the other?

Second, compilers introduce a lot of functions that are not written by the programmer, and thus are very, very common. If you look closely at our dataset, it is actually dominated by compiler utility functions and functions from the standard library. In a real-world setting, it is probably reasonable to assume that an analyst has seen these before. Should we discard these, or allow them to be in both the training and test sets, with the understanding that they are special in some way?

Constructing a training and test split has to take into account these questions. I actually created an additional dataset that splits the data via different mechanisms.

I think the most intuitively correct one is what I call splitting by library functions. The idea is quite simple. If a function name appears in the compilation of more than one test program, we call it a library function. For example, if we compile oo2.cpp and oo3.cpp to oo2.exe and oo3.exe respectively, and both executables contain a function called msvc_library_function, then that function is probably introduced by the compiler or standard library, and we call it a library function. If function oo2_special_fun only appears in oo2.exe and no other executables, then we call it a non-library function. We then split the data into training and test sets such that the training set consists only of library functions, and the test set consists only of non-library functions. In essence, we train on the commonly available functions, and test on the rare ones.
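The rule is simple enough to sketch in a few lines of Python. The record layout here (dicts with "program" and "name" keys) is a hypothetical stand-in for the real dataset schema, and this is not the script I actually used.

```python
from collections import defaultdict


def split_by_library(examples):
    """Split examples into (train, test) using the library-function rule.

    A function name seen in more than one test program counts as a library
    function and goes to the training set; all others go to the test set.
    """
    examples = list(examples)  # iterated twice below
    programs_by_name = defaultdict(set)
    for example in examples:
        programs_by_name[example["name"]].add(example["program"])

    train, test = [], []
    for example in examples:
        is_library = len(programs_by_name[example["name"]]) > 1
        (train if is_library else test).append(example)
    return train, test


if __name__ == "__main__":
    data = [
        {"program": "oo2", "name": "msvc_library_function"},
        {"program": "oo3", "name": "msvc_library_function"},
        {"program": "oo2", "name": "oo2_special_fun"},
    ]
    train, test = split_by_library(data)
    print(len(train), "training examples,", len(test), "test examples")
```

Keying on the test program rather than the executable matters in this sketch, because the same program is compiled many times with different MSVC versions and options.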

This idea isn't perfect, but it works pretty well, and it's easy to understand and justify. You can view this split here.

Step 3: Fine-tune a Model

Now that we have a dataset, we can fine-tune a model. I used the huggingface/CodeBERTa-small-v1 model on 🤗 HuggingFace as a base model. This is a model that was trained on a large corpus of code, but that is not trained on assembly code (and we will see some evidence of this).

My model, which I fine-tuned with the training split using the "by library" method described above, is available as ejschwartz/oo-method-test-model-bylibrary. It attains 94% accuracy on the test set, which I thought was pretty good. I suspect that OOAnalyzer performs similarly well.
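For reference, a minimal sketch of calling the model from Python might look like the following. It assumes the transformers package is installed and that the model works with the standard text-classification pipeline. The helper names are made up, the exact input formatting the model expects is not shown here, and truncation=True is my own addition to avoid errors on long inputs (it means the model only sees the start of long functions).

```python
def load_classifier(model_name="ejschwartz/oo-method-test-model-bylibrary"):
    """Create a text-classification pipeline for the fine-tuned model."""
    # Imported lazily so the rest of this sketch works without transformers.
    from transformers import pipeline
    return pipeline("text-classification", model=model_name)


def classify(disassembly, classifier):
    """Return the top (label, score) for one function's disassembly text."""
    # The pipeline returns a list with one dict per input string.
    result = classifier(disassembly, truncation=True)[0]
    return result["label"], result["score"]


# Usage (downloads the model on first run):
#   classifier = load_classifier()
#   print(classify("mov dword ptr [ebp-4], ecx", classifier))
```

Passing the classifier in as an argument keeps classify easy to try out with a stand-in function before downloading anything.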

If you are familiar with the 🤗 Transformers library, it's actually quite easy to use my model (or someone else's). You can actually click on the "Use in Transformers" button on the model page, and it will show you the Python code to use. But if you're a mere mortal, never fear, as I've created a Space for you to play with, which is embedded here:

If you want, you can upload your own x86 MSVC executable. But if you don't have one of those lying around, you can just click on one of the built-in example executables at the bottom of the space (ooex8.exe and ooex9.exe). From there, you can select a function from the dropdown menu to see its disassembly, and the model's opinion of whether it is a class method or not. If the assembly code is too long for the model to process, you'll currently encounter an error.

Here's a recording of what it looks like:

If you are very patient, you can also click on "Interpret" to run Shapley interpretation, which will show which tokens are contributing the most. But it is slow. Very slow. Like five minutes slow. It also won't give you any feedback or progress (sigh -- blame gradio, not me).

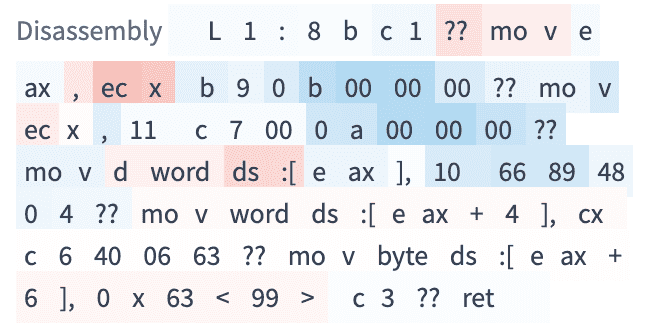

Here's an example of an interpretation. The dark red tokens contribute more to method-ness and dark blue tokens contribute to function-ness. You can also see that register names are not tokens in the original CodeBERTa model. For instance, ecx is split into ec and x, which the model learns to treat as a pair. It's not a coincidence that the model learned that ecx is indicative of a method, as this is a rule used in OOAnalyzer as well. It's also somewhat interesting that the model views sequences of zero bytes in the binary code as being indicative of function-ness.
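To make the idea of Shapley attribution a bit more concrete, here's a toy, exact computation in Python. Everything in it is hypothetical: the four tokens, and a stand-in scoring function that reports method-ness only when both ec and x are present. It is not how the Space computes its interpretation; it only shows how credit gets divided between tokens that matter as a pair.

```python
from itertools import permutations


def shapley_values(tokens, score):
    """Exact Shapley value for each token.

    score takes a frozenset of the token indices that are "present" and
    returns a number. Each token's value is its marginal contribution
    averaged over every order in which the tokens could be added.
    """
    n = len(tokens)
    totals = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        present = set()
        previous = score(frozenset(present))
        for index in order:
            present.add(index)
            current = score(frozenset(present))
            totals[index] += current - previous
            previous = current
    return [total / len(orders) for total in totals]


# Hypothetical tokenization in which ecx was split into "ec" and "x".
TOKENS = ["mov", "eax", "ec", "x"]


def toy_score(present):
    # Stand-in for a model: method-ness is 1.0 only if both halves of ecx appear.
    return 1.0 if {2, 3} <= present else 0.0


values = shapley_values(TOKENS, toy_score)
for token, value in zip(TOKENS, values):
    print(f"{token}: {value}")
```

The two halves of ecx split the credit evenly and the other tokens get none. Exact Shapley values need every ordering of the tokens, which grows factorially, so any practical tool has to approximate, and that goes some way to explaining why interpretation is slow.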

Looking Forward

Now that we've shown we can relearn a heuristic that is already included in OOAnalyzer, this raises a few other questions:

- One of the "false positives" of the ecx heuristic is the fastcall calling convention, which also uses the ecx register. We should ensure that the dataset contains functions with this calling convention (and others), since they do occur in practice.

- What other parts of OOAnalyzer can we learn from examples? How does the accuracy of learned properties compare to the current implementation?

- Can we learn to distinguish functions and methods for other architectures and compilers? Unfortunately, one bottleneck here is that our ground truth scripts only work for MSVC. But this certainly isn't an insurmountable problem.

I've mentioned a few times that I'm an avid skier. I live in central Pennsylvania though, and so the skiing is not always the best, to say the least!

I've been going to the BLISTER Summit in Crested Butte for the past two years now, and it's been a blast. The summit defies categorization; it's a cross between a huge ski demo, meet-up, and panel sessions. It's a great way to try out new gear, ski on an incredible mountain, and ski with some really awesome people, including Wendy Fisher, Chris Davenport, Drew Petersen and the BLISTER team.

Anyway, I mostly wanted to share a few fun pictures from the event. Somehow I made it into the promo video for next year's event! Sadly, it was not because of my epic skiing:

Pictures

I've been looking for a good excuse to post some pictures. So, here you go!

My family and I had quite the day attending the 2023 Central Pennsylvania Open Source Conference (CPOSC) yesterday at the Ware Center in Lancaster, PA. In addition to attending, we spoke in three different talks!

My wife, Dr. Stephanie Schwartz, kicked things off.

Next, my stepson Nick Elzer, along with his coworker from Quub, Nathaniel Evry, gave his first ever public conference presentation, and did a great job!

Started the open source repo for our space hardware development platform https://t.co/cRzHZkM6Gg

— Nathaniel Evry (@NathanielEvry) April 1, 2023

Had a blast presenting with @Nick_elzer for @quubspace!

Thank you @CPOSC for having us! pic.twitter.com/eRoHm5ea2u

Finally, I gave a tutorial presentation called "Introduction to Exploiting Stack Buffer Overflow Vulnerabilities". If you want, you can follow along here and with the videos below.

After a few years of hiatus, it was great to have CPOSC 2023 be back in person again. I saw a number of great presentations, and met a lot of interesting and smart people. It's always surprising how many technical people work in an area that is known for its rural farming!

Videos

Last December, I did most of Advent of Code in Rust, which I had never used before. You can find my solutions here.

The Good

Modern syntax with LLVM codegen

I tend to program functionally, perhaps even excessively so. I try to express most concepts through map, filter, and fold. I tend to enjoy languages that make this easy. Fortunately, this is becoming the norm, even in non-functional languages such as Python, Java and C++.

Perhaps it is not too surprising then that Rust, as a new language, supports this style of programming as well:

let x: i32 = (1..42).map(|x| x+1).sum();

println!("x: {x}");

What is truly amazing about Rust though is how this functional code is compiled to x86-64. At optimization level 1, the computation of x evaluates to

mov dword ptr [rsp + 4], 902

lea rax, [rsp + 4]

Yes, the compiler is able to unfold and simplify the entire computation, which is pretty neat. But let's look at the code at optimization level 0:

mov ecx, 1

xor eax, eax

mov dl, 1

.LBB5_1:

.Ltmp27:

movzx edx, dl

and edx, 1

add edx, ecx

.Ltmp28:

add eax, ecx

inc eax

mov ecx, edx

.Ltmp29:

cmp edx, 42

setb dl

.Ltmp30:

jb .LBB5_1

.Ltmp31:

sub rsp, 72

mov dword ptr [rsp + 4], eax

lea rax, [rsp + 4]

So our functional computation of a range, a map, and a sum (which is a reduce) is compiled into a pretty simple loop. And keep in mind this is at optimization level 0.
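As a quick sanity check on the constant 902 that shows up in the optimized build, the same range, map, and sum can be written in a couple of lines of Python. This is only an illustration of the arithmetic, not anything the Rust compiler does.

```python
# Same pipeline as the Rust snippet: the half-open range 1..42, map x + 1, then sum.
total = sum(x + 1 for x in range(1, 42))

# Closed form for the same thing: 41 terms running from 2 up to 42.
closed_form = 41 * (2 + 42) // 2

print(f"x: {total}")
```

Both expressions come out to 902, which matches the immediate that the compiler stores at [rsp + 4].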

By contrast, let's take a look at how OCaml handles this. First, the included OCaml standard library is not so great, so writing the program is more awkward:

let r = List.init 42 (fun x -> x + 1) in

let x = List.map (fun x -> x+1) r in

let x = List.fold_left (+) 0 x in

Printf.printf "x: %x\n" x

But let's look at the assembly with aggressive optimizations:

camlExample__entry:

leaq -328(%rsp), %r10

cmpq 32(%r14), %r10

jb .L122

.L123:

subq $8, %rsp

.L121:

movl $85, %ebx

movl $5, %eax

call camlExample__init_aux_432@PLT

.L124:

call caml_alloc2@PLT

.L125:

leaq 8(%r15), %rsi

movq $2048, -8(%rsi)

movq $5, (%rsi)

movq %rax, 8(%rsi)

movq camlExample__Pmakeblock_arg_247@GOTPCREL(%rip), %rdi

movq %rsp, %rbp

movq 56(%r14), %rsp

call caml_initialize@PLT

movq %rbp, %rsp

movq camlExample__Pmakeblock_arg_247@GOTPCREL(%rip), %rax

movq (%rax), %rax

call camlExample__map_503@PLT

.L126:

call caml_alloc2@PLT

.L127:

leaq 8(%r15), %rsi

movq $2048, -8(%rsi)

movq $5, (%rsi)

movq %rax, 8(%rsi)

movq camlExample__x_77@GOTPCREL(%rip), %rdi

movq %rsp, %rbp

movq 56(%r14), %rsp

call caml_initialize@PLT

movq %rbp, %rsp

movq camlExample__x_77@GOTPCREL(%rip), %rax

movq (%rax), %rax

movq 8(%rax), %rbx

movl $5, %eax

call camlExample__fold_left_558@PLT

.L128:

movq camlExample__x_75@GOTPCREL(%rip), %rdi

movq %rax, %rsi

movq %rsp, %rbp

movq 56(%r14), %rsp

call caml_initialize@PLT

movq %rbp, %rsp

movq camlExample__const_block_49@GOTPCREL(%rip), %rdi

movq camlExample__Pmakeblock_637@GOTPCREL(%rip), %rbx

movq camlStdlib__Printf__anon_fn$5bprintf$2eml$3a20$2c14$2d$2d48$5d_409_closure@GOTPCREL(%rip), %rax

call camlCamlinternalFormat__make_printf_4967@PLT

.L129:

movq camlExample__full_apply_240@GOTPCREL(%rip), %rdi

movq %rax, %rsi

movq %rsp, %rbp

movq 56(%r14), %rsp

call caml_initialize@PLT

movq %rbp, %rsp

movq camlExample__full_apply_240@GOTPCREL(%rip), %rax

movq (%rax), %rbx

movq camlExample__x_75@GOTPCREL(%rip), %rax

movq (%rax), %rax

movq (%rbx), %rdi

call *%rdi

.L130:

movl $1, %eax

addq $8, %rsp

ret

.L122:

push $34

call caml_call_realloc_stack@PLT

popq %r10

jmp .L123

The Bad

Lambda problem

In general, the Rust compiler's error messages are quite helpful. This is important, because dealing (fighting) with the borrow checker is a frequent occurrence. Unfortunately, there are some cases that, despite a lot of effort, I still don't really understand.

Here is a problem that I posted on Stack Overflow. It's a bit contrived, but it happened because I had a very functional solution to part 1 of an Advent of Code problem. The easiest way to solve the second part was to add a mutation.

Here is the program:

fn main(){

let v1=vec![1];

let v2=vec![3];

let mut v3=vec![];

v1.iter().map(|x|{

v2.iter().map(|y|{

v3.push(*y);

})

});

}

And here is the error:

error: captured variable cannot escape `FnMut` closure body

--> src/main.rs:6:5

|

4 | let mut v3=vec![];

| ------ variable defined here

5 | v1.iter().map(|x|{

| - inferred to be a `FnMut` closure

6 | / v2.iter().map(|y|{

7 | | v3.push(*y);

| | -- variable captured here

8 | | })

| |______^ returns a reference to a captured variable which escapes the closure body

The suggestions I received on Stack Overflow were basically "use loops". This was very disappointing for an example where the closures' scopes are clearly limited.

Anyway, it's still early days for Rust, so I hope that problems like this will be improved over time. Overall, it seems like a great language for doing systems development, but I still think a garbage collected language is better for daily driving.

Right before the holidays, I, along with my co-authors of the journal article The Art, Science, and Engineering of Fuzzing: A Survey, received an early holiday present!

Congratulations!

On behalf of Vice President for Publications, David Ebert, I am writing to inform you that your paper, "The Art, Science, and Engineering of Fuzzing: A Survey," has been awarded the 2021 Best Paper Award from IEEE Transactions on Software Engineering by the IEEE Computer Society Publications Board.

This was quite unexpected, as our article was accepted back in 2019 -- four years ago! But it only "appeared" in the November 2021 edition of the journal.

You can access this article here or, as always, on my publications page.

It's been an exciting year so far. I'm happy to announce that two papers I co-authored received awards. Congratulations to the students who did all the heavy lifting -- Jeremy, Qibin, and Alex!

Distinguished Paper Award: Augmenting Decompiler Output with Learned Variable Names and Types

Qibin Chen, Jeremy Lacomis, Edward J. Schwartz, Claire Le Goues, Graham Neubig, and Bogdan Vasilescu. Augmenting Decompiler Output with Learned Variable Names and Types, (PDF) Proceedings of the 2022 USENIX Security Symposium. Received distinguished paper award.

This paper follows up on some of our earlier work in which we show how to improve decompiler output. Decompiler output is often substantially more readable compared to the lower-level alternative of reading disassembly code. But decompiler output still has a lot of shortcomings when it comes to information that is removed during the compilation process, such as variable names and type information. In our previous work, we showed that it is possible to recover meaningful variable names by learning appropriate variable names based on the context of the surrounding code.

In the new paper, Jeremy, Qibin and my coauthors explored whether it is also possible to recover high-level types via learning. There is a rich history of binary analysis work in the area of type inference, but this work generally focuses on syntactic types, such as struct {float; float}. These type inference algorithms are generally already built into decompilers. In our paper, we try to recover semantic types, such as struct {float x; float y} point which includes the type and field names, which are more valuable to a reverse engineer. It turns out that we can recover semantic types even more accurately than variable names. This is in part because types are constrained by the way in which they are used. For example, an int can't be confused with a char because they are different sizes.

Best Paper Award: Learning to Superoptimize Real-world Programs

Alex Shypula, Pengcheng Yin, Jeremy Lacomis, Claire Le Goues, Edward Schwartz, and Graham Neubig. Learning to Superoptimize Real-world Programs, (Arxiv) (PDF) Proceedings of the 2022 Deep Learning for Code Workshop at the International Conference on Learning Representations. Received best paper award.

In this paper, Alex and our co-authors investigate whether neural models are able to learn and improve on optimizations at the assembly code level by looking at unoptimized and optimized code pairings that are generated from an optimizing compiler. The short answer is that they can, and by employing reinforcement learning on top, can learn to outperform an optimizing compiler in some cases! Superoptimization is an interesting problem in its own right, but what really excites me about this paper is it demonstrates that neural models can learn very complex optimizations such as register allocation just by looking at the textual representation of assembly code. The optimizations the model can perform clearly indicate that the model is learning a substantial portion of x86 assembly code semantics merely by looking at examples. To me, this clearly signals that, with the right data, neural models are likely able to solve many binary analysis problems. I look forward to future work in which we combine traditional binary analysis techniques, such as explicit semantic decodings of instructions, with neural learning.

I'm happy to announce that a paper written with my colleagues, A Generic Technique for Automatically Finding Defense-Aware Code Reuse Attacks, will be published at ACM CCS 2020. This paper is based on some ideas I had while I was finishing my degree that I did not have time to finish. Fortunately, I was able to find time to work on it again at SEI, and this paper is the result. A pre-publication copy is available from the publications page.
